usc research

Overview

During the summer of my junior year, I had the opportunity to participate in some machine learning research alongside graduate students at the University of Southern California. We were testing the effectiveness of different algorithms in different mujoco environments. For the most part we were working with reinforcement learning based on Q-Tables, which assigns scores to every iteration of a simulation. It aims to use those scores to “learn” an optimal solution to the task at hand.

Procedure

We used Python, the standard language to do ML stuff in. I did all my work in the PyCharm IDE, and used the NumPy and PyTorch Libraries. NumPy is a library that adds more math functions, and PyTorch is a library specifically for machine learning, specifically processing and development.

We trained the models using Reinforcement Learning(RL), which would assign a score based on the effectiveness of every round of training. These scores would be stored in something called a Q-Table. The goal was that the machine would learn which rounds scored the highest, and then use those as a reference for following runs, eventually learning the best.

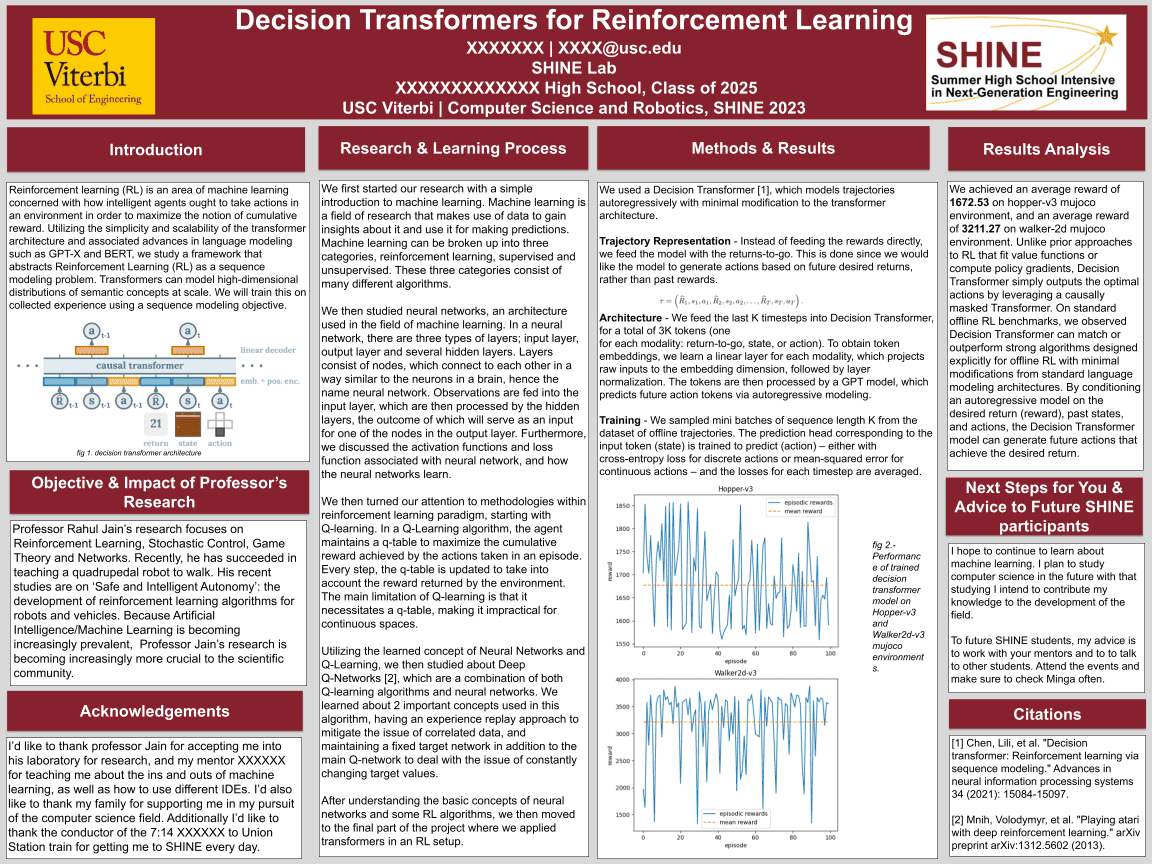

Results

The two environments that we recorded results for were Hopper-V3 and Walker2D-V3. Hopper didn’t work as well as we had hoped, but Walker would consistently get higher scores, only occasionally regressing to lower scores. Ideally, I would be able to go back and tweak my code so that I could try and get Hopper-V3 to work properly, but since the research was done over the summer, I had to go back to school :(